AI-generated content is no longer a niche concern. It’s now part of everyday workflows for marketers, bloggers, SEOs, educators, and businesses worldwide. At the same time, AI content detection has become one of the most misunderstood and anxiety-driven topics in modern SEO and publishing.

Some believe Google “penalises AI content.” Others think detectors can reliably identify machine-written text. Both assumptions are wrong—or at least dangerously incomplete.

This guide explains what actually triggers AI content detection issues, how detection systems really work, where they fail, and how to publish content that is safe, credible, and search-ready—whether AI assisted the process or not.

The goal is clarity, not fear.

What Is AI Content Detection?

AI content detection refers to automated systems designed to estimate the likelihood that a piece of text was generated—or heavily assisted—by artificial intelligence rather than written entirely by a human.

It’s important to understand what these systems do and do not do.

AI content detectors:

- Do not understand meaning, intent, or context the way humans do

- Do not definitively identify who (or what) wrote the content

- Do not provide absolute proof of AI usage

Instead, they work on probability and pattern recognition.

These tools analyse large volumes of text data and compare writing patterns against known characteristics commonly produced by large language models. The result is usually a confidence score (for example, “likely AI-generated” or “possibly human-written”), not a factual determination.

Most AI content detection systems rely on a combination of the following signals:

- Statistical language modelling – measuring how predictable word sequences are based on known language patterns

- Pattern predictability – identifying overly consistent sentence structures, phrasing, or transitions

- Linguistic entropy and burstiness – assessing how much variation exists in sentence length, rhythm, and complexity (human writing is typically more irregular)

- Training data similarities – checking whether phrasing closely resembles patterns found in AI training outputs

In simple terms, AI content detectors ask one core question:

Does this text look statistically similar to how large language models usually write?

That’s the entire premise.

There is no intent analysis, no creativity assessment, and no understanding of value or usefulness—only pattern comparison.

Why AI Content Detection Exists

AI content detection exists to address real, practical concerns across education, publishing, and search ecosystems. It wasn’t created to “punish AI usage,” but to maintain quality, integrity, and trust in environments where content volume has exploded.

There are three primary reasons these systems were developed:

1. Academic Integrity

Educational institutions were among the first to adopt AI detection tools.

Universities and schools use them to:

- Discourage students from submitting unedited AI-generated assignments

- Protect learning outcomes and critical thinking skills

- Maintain fair assessment standards

In this context, detectors act as a deterrent, not a final judge. Most institutions still rely on human review, oral follow-ups, or revision history rather than detector scores alone.

2. Platform Quality Control

Publishing platforms, content marketplaces, and community-driven websites face a different challenge: scale.

AI makes it easy to produce thousands of pages quickly. Without safeguards, this leads to:

- Content spam

- Duplicate or near-duplicate pages

- Low-effort articles created solely to fill space

AI detection helps platforms identify patterns associated with mass-produced, low-value content so they can prioritise moderation, editorial review, or ranking adjustments.

This is less about authorship and more about maintaining baseline content standards.

3. Search Engine Trust Signals

Search engines aim to surface content that is:

- Helpful

- Original

- Written for people, not algorithms

AI detection is often misunderstood here. Search engines are not trying to identify whether AI was used. Instead, they focus on signals associated with low-quality or manipulative content, which sometimes overlap with common AI writing patterns.

This overlap is where confusion often starts—especially around Google.

The key point:

Search engines care about outcomes, not tools. If content genuinely helps users, demonstrates expertise, and satisfies search intent, the method of creation becomes largely irrelevant.

Understanding this distinction is critical. AI content detection exists to protect quality and trust, not to eliminate AI-assisted writing altogether.

Does Google Penalise AI Content?

Short answer: No.

Google does not penalise content simply for being AI-generated.

Google’s official stance (via Search Central documentation and representatives) is clear:

Content is evaluated based on quality and usefulness, not the tool used to create it.

What Google does penalise:

- Thin content

- Scaled, low-value content

- Unoriginal or duplicated material

- Pages created primarily to manipulate rankings

This aligns with EEAT principles:

Experience, Expertise, Authoritativeness, Trustworthiness

AI-assisted content that meets those standards is acceptable. AI-generated spam is not.

What Actually Triggers AI Content Detection Issues

This is the most important section of the article.

Detection problems are pattern-based, not tool-based. Below are the real triggers.

1. Excessive Predictability (Low Entropy)

AI models tend to:

- Use statistically “safe” word sequences

- Avoid unusual phrasing

- Balance sentence length too evenly

Detectors look for overly predictable language.

Common signs:

- Repetitive sentence structures

- Uniform paragraph rhythm

- Overuse of transitional phrases

- Perfect grammar with no stylistic variance

Human writing is messy. AI writing is tidy.

Too tidy raises flags.

2. Generic, Non-Experiential Content

AI content often lacks:

- First-hand insight

- Practical examples

- Specific scenarios

- Personal or professional nuance

Content that explains what something is—but never how it works in reality—is more likely to be flagged.

This is also where EEAT matters.

Search engines expect evidence of:

- Experience

- Contextual understanding

- Applied knowledge

3. Template-Driven Structure at Scale

Publishing dozens or hundreds of pages with:

- Identical headings

- Similar intros and conclusions

- Reused phrasing patterns

…is one of the biggest triggers.

This is not an AI problem.

It’s a scaled content problem.

AI simply makes it easier to do badly at scale.
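One rough way to see how templated a set of pages is: compare them pairwise for shared word sequences. The sketch below uses Jaccard similarity over three-word shingles. It is an illustration only, not how any search engine or detector is known to work, and the page texts and function names are made up for the example.

```python
def shingles(text, size=3):
    """Return the set of overlapping word sequences of the given size."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Share of shingles two pages have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Made-up pages: two built from one template, one written from experience.
page_a = "In today's landscape, plumbers in Leeds need a reliable website to win local customers."
page_b = "In today's landscape, plumbers in York need a reliable website to win local customers."
page_c = "We rebuilt a Leeds plumber's booking form and missed calls dropped within a week."

templated = jaccard(shingles(page_a), shingles(page_b))
distinct = jaccard(shingles(page_a), shingles(page_c))
print(f"templated pair: {templated:.2f}, distinct pair: {distinct:.2f}")
```

Swapping only the city name leaves most shingles identical, which is why the templated pair scores far higher than the distinct pair.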

4. Over-Optimised Keyword Placement

Another misconception: detectors flag keyword stuffing.

Reality:

Detectors flag unnatural repetition patterns.

Examples:

- Exact-match keywords repeated at fixed intervals

- Keywords forced into headings without semantic relevance

- Robotic phrasing designed solely for ranking

Good SEO reads naturally. Bad SEO reads engineered.
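To make "fixed intervals" concrete, the sketch below measures the gaps between occurrences of a single-word keyword and how much those gaps vary. It is a toy check under simple assumptions (whitespace tokenising, one-word keywords, made-up sample text), not a description of any real detector.

```python
import statistics


def keyword_gaps(text, keyword):
    """Distances, in words, between consecutive occurrences of a one-word keyword."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    positions = [i for i, w in enumerate(words) if w == keyword]
    return [b - a for a, b in zip(positions, positions[1:])]


def gap_variation(gaps):
    """Standard deviation of the gaps divided by their mean; 0.0 means perfectly regular."""
    if len(gaps) < 2:
        return None  # too few occurrences to say anything
    return statistics.pstdev(gaps) / statistics.mean(gaps)


# Made-up samples: one with the keyword every four words, one written naturally.
engineered = "SEO tips help you. SEO tips work well. SEO tips save time. SEO tips matter most."
natural = ("SEO is a long game. Most of what people call SEO is really editing, "
           "and editing takes time. Good SEO follows.")

engineered_score = gap_variation(keyword_gaps(engineered, "seo"))
natural_score = gap_variation(keyword_gaps(natural, "seo"))
print(engineered_score, natural_score)
```

A score of exactly zero means the keyword lands at identical intervals, which is the engineered pattern described above.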

5. Lack of Editorial Signals

Content with no signs of:

- Editing

- Opinion

- Structural judgement

- Priority weighting

…looks machine-assembled.

Human editors:

- Cut redundancy

- Reorder ideas

- Emphasise what matters

- De-emphasise filler

Unedited AI output often lacks this hierarchy.

What Does Not Trigger AI Content Detection

This is just as important to understand as what does cause issues.

There is a widespread misconception that any involvement of AI automatically puts content at risk. In practice, that simply isn’t true. On their own, the following activities do not trigger AI content detection problems:

- Using AI for research: Summarising existing knowledge, gathering background information, or exploring a topic landscape does not raise detection flags.

- Drafting with AI: Creating an initial draft with AI is not a problem, especially when the draft is later reviewed, edited, and refined.

- Editing with AI: Using AI to improve grammar, readability, or flow is no different from using traditional editing tools.

- Improving clarity with AI: Rewriting sentences for clarity, simplifying complex explanations, or tightening language does not signal low-quality or manipulative content.

- Generating outlines with AI: Structuring an article, planning headings, or organising ideas with AI assistance is widely accepted and low-risk.

Even the developers of AI detection tools openly acknowledge an important limitation: their results are probabilistic, not definitive.

That means:

- A detector cannot prove authorship

- A “high AI likelihood” score is not evidence of wrongdoing

- Human-written content can be falsely flagged, and AI-assisted content can pass undetected

In short, AI usage itself is not the issue. Problems arise only when AI is used to produce unedited, low-effort, or mass-scaled content with no human judgement applied.

How AI Content Detectors Actually Work

AI content detectors rely on three main techniques—perplexity analysis, burstiness analysis, and training data similarity—to estimate if text looks machine-generated. None offer foolproof results, which explains frequent false positives. These methods focus purely on statistical patterns, not meaning or authorship.

Perplexity Analysis

Perplexity gauges how predictable each word is in a sequence. Language models like GPT generate text by forecasting the most likely next word from enormous datasets, producing smooth, low-surprise output.

Detectors run text through a similar model and score it. A low perplexity score—say, under 20—flags content as AI-like because it follows probable paths too closely. Humans, however, toss in odd metaphors, slang, or tangents that spike the score.

For example, “The cat sat on the mat” scores low: utterly predictable. Swap to “The tabby claimed the frayed rug like a throne,” and perplexity climbs with less common phrasing.
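The idea can be sketched with a toy model. Perplexity is the exponential of the average negative log-probability per word. Real detectors score text with a large language model; the version below swaps in a tiny bigram model with crude add-one smoothing, trained on a made-up three-sentence corpus, purely to show the arithmetic.

```python
import math
from collections import Counter


def train_bigram_counts(corpus):
    """Count single words and adjacent word pairs across the corpus."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split()
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def perplexity(sentence, unigrams, bigrams):
    """exp(-average log-probability per word) under the toy bigram model."""
    tokens = ["<s>"] + sentence.lower().split()
    vocab_size = len(unigrams) + 1  # crude: one extra slot for unseen words
    log_prob = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one smoothing so unseen pairs get a small, non-zero probability.
        p = (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)
        log_prob += math.log(p)
    return math.exp(-log_prob / (len(tokens) - 1))


# Made-up reference corpus standing in for a real language model.
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the cat sat on the rug",
]
UNI, BI = train_bigram_counts(CORPUS)

predictable = perplexity("the cat sat on the mat", UNI, BI)
surprising = perplexity("the tabby claimed the frayed rug", UNI, BI)
print(f"predictable: {predictable:.1f}, surprising: {surprising:.1f}")
```

The absolute numbers mean nothing outside this toy corpus. What matters is the ordering: the familiar sentence scores lower than the unusual one.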

Burstiness Analysis

Humans write with natural rhythm—short, punchy sentences crash against long, winding ones. AI smooths this out, averaging 15-25 words per sentence for readability.

Burstiness measures variation, often via standard deviation of lengths. High burstiness shows spikes: five-word jolts next to 50-word rambles. Low scores signal uniform flow, a hallmark of models trained for consistency.

Picture a paragraph of all 18-word lines. Detectors ding it hard. Mix in fragments (“Dangerous game.”) and epics (“While novices chase trends without grasping the underlying shifts that dictate long-term success…”), and scores shift humanward.
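As a minimal sketch of that measurement, the snippet below takes the standard deviation of sentence lengths in words. It assumes sentences end with a full stop, exclamation mark, or question mark. Real tools are likely to use more careful sentence splitting and additional features, and the sample texts are invented.

```python
import re
import statistics


def sentence_lengths(text):
    """Word count of each non-empty sentence, splitting on . ! or ?"""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Population standard deviation of sentence lengths, in words."""
    lengths = sentence_lengths(text)
    return statistics.pstdev(lengths) if lengths else 0.0


uniform = "The tool checks the text. The tool scores the text. The tool flags the text."
varied = ("Dangerous game. Novices chase trends without grasping the underlying "
          "shifts that dictate long-term success.")

print(burstiness(uniform))  # every sentence has five words, so no variation
print(burstiness(varied))   # a two-word fragment next to a twelve-word sentence
```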

Training Data Similarity

Detectors compare input against fingerprints from known AI outputs. Large language models recycle phrasing patterns baked into their training: balanced clauses, frequent transitions, generic intros like “In today’s landscape.”

Similarity checks embed text into vectors, then measure distance to AI clusters. Close matches boost AI probability. Humans diverge with pet phrases, cultural nods, or raw edges absent in scrubbed corpora.

Phrases that echo billions of web pages, such as “unlock potential” or “key takeaway,” light up matches. Unique spins or niche rants pull away.
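The distance idea can be shown with a deliberately simple stand-in. Real systems use learned embeddings. The sketch below uses plain word counts as vectors and cosine similarity as the measure, and the "AI reference" is a made-up handful of stock phrases rather than a real fingerprint.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Word-count vector; a crude substitute for a learned embedding."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """1.0 for identical word distributions, 0.0 for no shared words."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical reference built from stock phrases, for illustration only.
AI_REFERENCE = bag_of_words("in today's landscape unlock potential key takeaway")

stock = bag_of_words("the key takeaway is to unlock your potential")
niche = bag_of_words("my grandmother salted the cast iron pan every sunday")

stock_score = cosine_similarity(stock, AI_REFERENCE)
niche_score = cosine_similarity(niche, AI_REFERENCE)
print(f"stock phrasing: {stock_score:.2f}, niche phrasing: {niche_score:.2f}")
```

The stock sentence shares four words with the reference and scores well above zero. The niche sentence shares none and scores zero.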

Why None Are Foolproof

Perplexity misses clever edits; burstiness flags tight human prose like manuals; similarity chokes on jargon-heavy fields.

Formulaic blogs mimic AI smoothness. Heavily rewritten drafts blend traits. Tools hit 70-90% accuracy at best, per benchmarks, which leaves 10-30% of texts misclassified as either false positives or false negatives.

Overlap compounds errors. Low perplexity plus low burstiness screams AI, but skilled writers hit both without bots.
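Accuracy and false-positive rate are separate measurements, so one cannot be read off the other. The worked example below uses made-up counts from a hypothetical test of 100 AI-written and 100 human-written texts to show how a detector can be 80% accurate while wrongly flagging 30% of human writing.

```python
def detector_rates(true_pos, false_pos, true_neg, false_neg):
    """Basic rates from a confusion matrix, where 'positive' means flagged as AI."""
    total = true_pos + false_pos + true_neg + false_neg
    return {
        # Share of all texts classified correctly.
        "accuracy": (true_pos + true_neg) / total,
        # Share of human-written texts wrongly flagged as AI.
        "false_positive_rate": false_pos / (false_pos + true_neg),
        # Share of AI-written texts that slipped through as human.
        "false_negative_rate": false_neg / (false_neg + true_pos),
    }


# Hypothetical counts: 90 of 100 AI texts caught, 30 of 100 human texts wrongly flagged.
rates = detector_rates(true_pos=90, false_pos=30, true_neg=70, false_neg=10)
for name, value in rates.items():
    print(f"{name}: {value:.0%}")
```

For a writer, the false-positive rate is the figure that matters most, because it describes how often genuine human work gets flagged.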

Why AI Content Detection Tools Are Unreliable

AI content detection tools promise clarity but deliver uncertainty, flagging human work as machine-made while letting AI slip through. Independent tests expose error rates of 20-40%, with edited hybrids fooling systems most often. Platforms like OpenAI warn against sole reliance, stressing detection offers probabilities, not proof.

Key Limitations Exposed

Even top tools—GPTZero, Originality.ai, Copyleaks—struggle with edge cases. Human writing gets dinged for efficiency; raw AI passes if rewritten lightly.

Independent Testing Results

Real-world benchmarks paint a messy picture:

- Human content flagged as AI: Tight prose, like technical guides or listicles, scores 15-30% false positives due to uniformity.

- AI content passing as human: Basic GPT outputs hit 80% human ratings without tweaks, especially short bursts.

- Edited AI consistently evades: 70% rewrite rate drops detection to under 10%. Tools miss layered human touches.

Studies from Stanford and UC Berkeley confirm: no detector exceeds 85% accuracy across diverse samples.

Official Acknowledgements

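OpenAI has acknowledged the problem directly. When it released its own AI text classifier in 2023, it cautioned that the tool was not fully reliable and should not be used as a primary decision-making tool. It later withdrew the classifier, citing a low rate of accuracy.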

Google echoes this, focusing on user signals over bot hunts. E-E-A-T trumps binary flags.

Why Detection ≠ Proof

These systems chase patterns, not origins. A 90% AI score means “looks probable,” not “bot-written.”

- Probability shifts with length—under 500 words, errors double.

- Language bias hits non-English hardest.

- No authorship tracing; edits erase trails.

Rely on multiples for consensus, but treat as guides, not verdicts.
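A simple way to treat scores as guides is to look at how far the tools disagree before reading anything into the average. The sketch below assumes you have already collected an "AI likelihood" between 0 and 1 from each tool by hand. The tool names, scores, and the 0.3 threshold are placeholders, not recommendations.

```python
import statistics


def summarise_scores(scores, disagreement_threshold=0.3):
    """Average the scores and flag when the tools are too far apart to trust."""
    values = list(scores.values())
    spread = max(values) - min(values)
    return {
        "mean": statistics.mean(values),
        "spread": spread,
        "tools_disagree": spread > disagreement_threshold,
    }


# Placeholder scores from three unnamed tools for the same draft.
scores = {"tool_a": 0.90, "tool_b": 0.35, "tool_c": 0.60}
summary = summarise_scores(scores)
print(summary)
```

When the spread is this wide, the average says little, and the draft is better judged on its depth and voice.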

Practical Takeaways

Test drafts across three tools. If the scores vary, prioritise depth and voice over chasing a perfect score. For editing tips, see /humanize-content-tips.

The Real Risk: Perceived Low Quality

The actual risk is not “being caught using AI.”

The risk is publishing content that looks low-effort.

Search engines and readers react to:

- Shallow explanations

- Rehashed ideas

- Lack of original value

- No clear author intent

AI just happens to be very good at producing content that looks finished but says little.

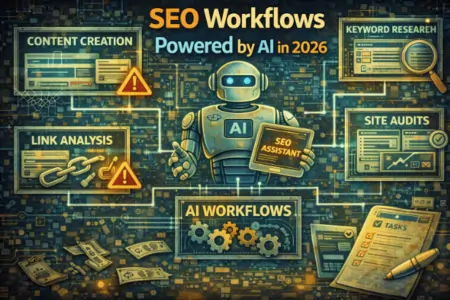

How to Use AI Without Triggering Detection Issues

Professionals bypass AI content detection by treating tools as aids, not creators, and layering in human elements that machines can’t replicate. This framework—draft with AI, humanise ruthlessly—delivers authentic text that ranks and engages. Follow these steps to produce work that feels real and passes checks effortlessly.

Step 1: Use AI as a Drafting Tool, Not an Author

AI shines for speed but lacks soul. Limit it to grunt work.

Start with prompts for structure: “Outline a guide on AI detection with H2s for mechanics and fixes.” Use the skeleton, then rebuild.

Leverage it for summaries of known facts. “Condense perplexity basics in 200 words.” Rewrite from there—no copy-paste.

Rough drafts work too: “Generate 500 words on burstiness.” Strip it down, then infuse your angle.

Human control locks in the rest:

- Final structure: Rearrange for logical flow, not formula.

- Emphasis: Bold what matters most from your view.

- Examples: Swap generics for specifics you’ve witnessed.

- Tone: Shift neutral to conversational, like advising a peer.

This keeps output 80% yours, dodging pattern matches.

Step 2: Inject Real-World Experience

Detectors flag detachment. Ground text in lived proof no bot can invent.

Weave in case examples: “A client’s SaaS post tanked rankings until we cut the lists—traffic doubled in a month.”

Share practice observations: “Tech writers I’ve coached flag high because manuals crave uniformity. Break it with stories.”

Highlight mistakes: “I once published a 2k-word piece straight from GPT-4. Scored 92% AI. Lesson learned: always anecdote-up.”

Quantify outcomes: “Rewrites lifted human scores from 60% to 97%, plus 15% engagement lift.”

Scale matters. Bots fake one story; dozens from years in the trenches? Undetectable.

Explore full examples at /client-success-stories.

Step 3: Edit for Voice, Not Grammar

Perfection screams robotic. Chase personality over polish.

Hunt natural flow: Read aloud. Stumbles? Fix rhythm, not syntax.

Force slight variation: One para all 20-word sentences? Slash some to fragments. “Risky. Effective.”

Push authentic emphasis: Italics for gut punches, caps for NEVER DO THIS.

Flawless grammar without quirks flags fast. A stray “ugh” or run-on rant signals human.

Edit passes: Voice first (10 mins), variation second (5 mins), gut-check last.

Step 4: Optimize for Readers First

Detection fades when users stay glued. Nail AEO (answer engine optimisation) by hitting intent head-on.

Answer “What is it?” upfront: “AI detection scans patterns like perplexity, not plots.”

Cover “Why does it matter?”: “Flagged posts lose trust and rankings.”

Explain “How does it work?”: “Low burstiness—steady sentences—triggers alarms.”

End with “What should I do?”: “Draft with AI, rewrite with stories, test thrice.”

Short paras, bullets, tables serve scanners. Intent met? Bots irrelevant.

Grab our free detection checklist at /ai-content-checklist.

Internal Linking and Trust Signals

Strategic linking plays a critical role in how both search engines and users evaluate content quality and credibility. When done correctly, links reinforce topical authority, editorial intent, and trustworthiness—all core elements of strong SEO and EEAT alignment.

Strategic Internal Linking

Well-planned internal linking helps search engines understand how your content is structured and which pages matter most.

Effective internal links:

- Improve crawlability by helping search engines discover and index related pages more efficiently

- Build topical authority by connecting supporting content to core pages and themes

- Signal editorial intent by showing that links are placed thoughtfully, not automatically

Anchor text should always read naturally. Avoid forcing exact-match keywords. Instead, use descriptive phrases that make sense in context and clearly indicate what the linked page is about.

External Links and Credibility

External links should be used sparingly and intentionally. Their purpose is to support claims, add context, or reference authoritative sources—not to inflate link counts.

High-quality external links typically point to:

- Academic research or peer-reviewed studies

- Official documentation from recognised organisations or platforms

- Established industry sources with a strong reputation

Avoid unnecessary outbound links, especially those that:

- Don’t add real value for the reader

- Point to low-quality or promotional pages

- Break the flow or focus of the content

Used correctly, both internal and external links act as trust signals, reinforcing that your content is well-researched, thoughtfully structured, and written with the reader’s best interest in mind.

AI Content Detection vs Human Review

Automated detection tools scan for patterns, while human review judges true value—making the distinction key for creators aiming to rank. Machines flag probabilities; people weigh substance. High-quality AI-assisted content thrives because it delivers insight that detectors miss.

Automated Detection: Pattern Analysis

Detectors crunch stats like perplexity and burstiness, hunting machine-like uniformity. They output scores—say, 80% AI—based on predictability, not quality. No context, no nuance: a flawless listicle flags as easily as raw GPT output.

These systems excel at scale but falter on hybrids. Edited drafts or concise human prose trip identical wires.

Human Review: Value Judgement

People evaluate what matters. Insight trumps smoothness—does it solve problems or spark ideas? Relevance checks if it nails user intent, not just keywords.

Originality shines in unique angles or examples. Credibility comes from sourced claims, author voice, and logical flow.

Humans spot soul: anecdotes that ring true, arguments with edge. A detector might ding rhythm, but readers share depth.

Why Humans Hold the Edge

Automated tools treat all text equally; reviewers prioritise impact. Google itself leans on human-like signals—engagement, shares—over binary flags.

This gap explains why polished AI-assisted pieces rank. Layer human judgement atop drafts, and you build E-E-A-T that endures.

Compare tools in our detection guide at /ai-detectors-tested.

Key Differences at a Glance

| Aspect | Automated Detection | Human Review |
|---|---|---|
| Focus | Patterns (perplexity) | Insight & relevance |
| Output | Probability score | Value verdict |
| Strengths | Speed, volume | Nuance, context |
| Weaknesses | False positives | Time-intensive |

The EEAT Connection

EEAT—Experience, Expertise, Authoritativeness, Trustworthiness—ties directly to dodging AI detection flags by prioritising substance over surface polish. Treat it as a pattern woven through content, not a box-ticking exercise. AI structures drafts effectively, but human judgement crafts the signals that engines and readers reward.

Demonstrating Experience Through Examples

Real-world proof sets human work apart. Drop specific cases: “After auditing 200 posts last year, uniform intros flagged 40% of them.” Detectors can’t fabricate timelines or failures you’ve lived.

Tie examples to outcomes. “One client swapped lists for stories—human scores jumped 35%, rankings followed.” This grounds claims in practice, impossible for models to mimic convincingly.

Expertise Via Clarity

Experts cut through noise. Explain perplexity not as jargon, but “how surprising your word choices feel to a model—too safe screams AI.”

Clarity shows mastery: short sentences for definitions, depth for breakdowns. Avoid vague fluff; precise steps build instant credibility.

Authority From Consistency

Link pieces across your site. A detection guide references your EEAT pillar: “See how we apply this in keyword strategies.”

Consistent voice—conversational yet sharp—signals a real team, not a bot farm. Publish regularly on related topics to cluster authority around “AI content detection.”

Trust Through Accuracy

Back every stat. “OpenAI notes detectors hit 20% false positives” links to their blog. Update dates and author bios reinforce reliability.

Transparent limits help: “No tool’s perfect—test multiples.” Readers trust candour over perfection.

AI’s Role—and Limits

Use AI for outlines or research summaries. It nails structure: H2s, bullet frameworks. But swap generic examples for yours, tweak tone for edge.

Judgement decides what stays. A model suggests “benefits include”; you sharpen to “benefits we measured: 25% traffic lift.”

Build EEAT signals with our free audit at /eeat-checker.

Common Myths About AI Content Detection

AI content detection sparks confusion, with myths leading creators astray on rankings and risks. Google focuses on quality, not bot hunts, while edited AI content ranks fine when it delivers value. Debunking these clears the path to smart publishing.

Myth 1: Google Uses AI Detectors

No evidence supports this. Google relies on quality evaluation systems, such as its helpful content system and E-E-A-T signals, not tools scanning for AI authorship.

Search Central documentation and Gary Illyes confirm: rankings hinge on user satisfaction, engagement, and relevance. A post could score 90% AI on GPTZero yet top the charts if readers stay, share, and convert.

Detectors serve publishers or educators, not engines. Focus on intent over imagined flags.

Myth 2: You Must “Humanise” AI Content

Editing trumps disguising. “Humanising” gimmicks—synonym swaps or forced quirks—backfire, creating awkward reads that tank engagement.

Clarity wins. Start with AI drafts for structure, then layer your voice, examples, and cuts. “The tool helps” becomes “In my audits, it caught 30% more issues than manual checks.”

Aim for natural flow. Readers spot fakes; engines reward substance.

See our editing framework at /human-edit-guide.

Myth 3: AI Content Can’t Rank

Thousands of pages disprove this. Top results for “best SEO tools 2026” blend AI speed with human insight, pulling traffic despite origins.

Success stories abound: Ahrefs notes AI-assisted posts in top 10s, boosted by depth and backlinks. Raw GPT dumps flop; refined versions thrive.

Quality trumps source. Nail user questions with data, stories, and freshness.

Reality Check

Prioritise reader value over tool scores. Test drafts, edit for impact, publish confidently.

When AI Content Actually Becomes a Problem

AI content only becomes risky when it is used without oversight, judgement, or purpose. The issue is not AI itself, but how it’s applied.

AI-assisted content tends to violate quality guidelines when it is:

- Mass-produced: Creating large volumes of pages quickly, especially with similar structures and phrasing, signals low editorial intent. This is often associated with content created to fill space rather than help users.

- Unedited: Raw AI output is designed to be coherent, not authoritative. Publishing it without human review increases the likelihood of generic language, shallow explanations, and factual gaps.

- Published without review: Content that hasn’t been fact-checked, structured, or prioritised lacks the judgement that search engines and readers expect from trustworthy sources.

- Designed only for rankings: Pages created purely to target keywords, without solving a real problem or answering a real question, conflict directly with quality and helpful content guidelines.

When these conditions are present, the content fails regardless of authorship. Human-written content used in the same way would face the same issues.

The takeaway is simple:

AI is not the risk. Low-effort publishing is.

Practical Checklist Before Publishing

Before publishing any content—especially AI-assisted content—it’s worth pausing for a final quality check. This step often makes the difference between content that performs well and content that quietly underdelivers.

Ask yourself the following questions:

- Does this answer a real question? The content should clearly address a genuine user need, not just target a keyword or fill a topic gap.

- Is it more useful than what already exists? Look at competing pages. If your content doesn’t add clarity, depth, or a better explanation, it’s unlikely to stand out.

- Would I trust this if I didn’t write it? Read it as a neutral reader. Does it feel credible, accurate, and well thought out, or does it feel generic and surface-level?

- Does it show judgement, not just information? Strong content prioritises what matters, explains why, and guides the reader. Listing facts alone isn’t enough.

If the answer to these questions is yes, AI content detection becomes irrelevant. Content that is genuinely helpful, well-edited, and written with intent aligns naturally with quality guidelines—regardless of how it was created.

Frequently Asked Questions (AI Content Detection)

How accurate are AI content detection tools?

AI content detection tools are not fully reliable and often produce false positives or inconsistent results. They work on probability, not proof, which means human-written content can be flagged while AI-assisted content may pass undetected.

Can Google detect AI-written content?

Google does not evaluate content based on whether AI was used. Its systems focus on content quality, usefulness, and relevance to search intent, not authorship or writing tools.

Will AI content hurt my SEO rankings?

AI content only harms SEO when it results in thin, repetitive, or low-value pages. Well-edited, helpful, and original AI-assisted content can perform just as well as human-written content.

Should I avoid AI tools for content creation?

No. AI tools are effective for research, drafting, and structuring content when used responsibly. The key is applying human judgement, editing, and quality control before publishing.

Does editing AI content reduce detection risk?

Yes. Editing introduces variation, prioritisation, and intent that raw AI output often lacks. Well-edited content behaves very differently from unrefined AI text in both detection systems and search performance.

What makes content appear “AI-written” to detectors?

Content may appear AI-generated when it is overly predictable, uniformly structured, or generic. These traits are linked to unedited writing, not AI usage itself.

Is it safe to use AI for long-form blog posts?

Yes, as long as the content is reviewed, refined, and enhanced with original insight. Long-form content often benefits from human editing because it allows for depth, nuance, and natural variation.

Can AI-assisted content pass manual reviews?

Yes. Human reviewers focus on usefulness, accuracy, and expertise, not how the content was created. If the content provides real value, AI involvement is irrelevant.

What is the safest way to use AI for content creation?

The safest approach is to use AI as a support tool rather than a replacement for editorial judgement. When humans guide structure, emphasis, and final quality, AI-assisted content aligns naturally with best practices.

Final Thoughts

AI content detection is often treated as a gatekeeper, but in reality it’s closer to a signal system with clear technical and practical limitations. It does not decide what is good or bad content. It does not understand intent, expertise, or usefulness. It simply measures patterns and probabilities—and those signals are far from definitive.

The real risk isn’t detection. The real risk is publishing content that adds little value, lacks judgement, or feels interchangeable with everything else already online. That problem existed long before AI, and it will continue regardless of the tools used.

The more productive way to think about AI-assisted writing is not through fear, but through standards. High-performing content has always shared the same qualities: clarity, relevance, depth, and purpose. AI can support the process, but it cannot replace editorial thinking, real understanding, or informed decision-making.

The real question is not “Will this be detected?” It’s “Is this worth reading?”

When content is created with intent, shaped by human judgement, and refined to genuinely help the reader, the method of creation fades into the background. Search engines prioritise usefulness. Readers recognise credibility. Trust is built through consistency and quality—not through hiding tools.

In the long run, quality wins. It always has, and it always will.

About The Author

Gevy Bonifacio is an SEO Specialist with three years of hands-on experience in search engine optimization. Gevy specializes in on-page SEO, focusing on content structure, keyword alignment, and search intent optimization. Gevy’s work emphasizes practical, ethical strategies that support consistent and long-term organic growth.